e-rhic-ir-l AT lists.bnl.gov

Subject: E-rhic-ir-l mailing list


- From: "Palmer, Robert" <palmer AT bnl.gov>

- To: "Aschenauer, Elke" <elke AT bnl.gov>

- Cc: "E-rhic-ir-l AT lists.bnl.gov" <E-rhic-ir-l AT lists.bnl.gov>

- Subject: Re: [E-rhic-ir-l] Discussion of parameter choices

- Date: Thu, 9 Mar 2017 19:43:24 +0000


Great. You are indeed superfast and I am happy that we are pretty much on the same page. Some points you bring up:

1. Re inverse cross sections: I see the use of inverse cross sections as reflections of what we can do in some as yet undefined time. But with twice the luminosity we get twice the data in the same time. Thus the measure of Luminosity times efficiency is the right comparative measure of what we can expect to do.

2. Re lowering dp/p: It would be nice to perform the Fourier transform with efficiencies based on Richard's results with, or without, the assumption of lower momentum spread (1/2), raising the R3/R4 efficiencies by 2.4 and reducing luminosity to 0.66. Of course, interpreting the resultant structure shape errors will not be unambiguous, depending on what aspect we are most interested in.

3. Re divergence effects: Would you guys be able to redo a Fourier transformation with added pt errors from larger divergences? The errors will, of course, be much worse at low pt than at high pt and could be better corrected for with greater statistics, but a first stab would be to just insert them, do the transform, and see what effect they have. This might be instructive. Again one would want to do it for at least two cases: a) randomly scatter the pts corresponding to an average divergence A with the same statistics A, and b) randomly scatter them by a larger amount, assuming a larger average divergence B, using better statistics corresponding to luminosity B, where the luminosities (inverse cross sections) are proportional to divergence^2.

| Case | Ave divergence | Inverse cross section |
|------|----------------|-----------------------|
| A    | 100 murad      | 10 fb^-1              |
| B    | 200 murad      | 40 fb^-1              |
| C    | 316 murad      | 100 fb^-1             |

I am deliberately changing divergences in both x and y since the physics is xy independent. I am suggesting you do this with fixed efficiencies, say 10% below 0.4 GeV/c and 50% above 0.4 GeV/c, to keep the two effects separate.
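As a rough illustration of points 2 and 3, here is a minimal Python sketch of the bookkeeping involved. It is not the simulation Rich and Elke use. The function names, the beam momentum `p_beam`, the exponential slope `slope`, and the event count `n_base` are all assumptions chosen only for illustration; the divergence^2 scaling, the table values, and the 10%/50% step efficiency are taken from the text above.

```python
import math
import random

# Reference point from the table: case A, 100 murad <-> 10 fb^-1.
BASE_DIV_URAD = 100.0
BASE_LUMI_FB = 10.0


def relative_yield(eff_factor, lumi_factor):
    """Luminosity times efficiency, relative to the baseline (point 1)."""
    return eff_factor * lumi_factor


def lumi_for_divergence(div_urad):
    """Integrated luminosity, assuming it scales as divergence squared."""
    return BASE_LUMI_FB * (div_urad / BASE_DIV_URAD) ** 2


def efficiency(pt):
    """Fixed step efficiency from the text: 10% below 0.4 GeV/c, 50% above."""
    return 0.10 if pt < 0.4 else 0.50


def smeared_sample(div_urad, n_base, p_beam=100.0, slope=4.0, seed=1):
    """Toy sample of accepted, smeared pt values (GeV/c).

    Assumptions (not from the thread): p_beam in GeV/c, an exponential
    |t| spectrum with the given slope in GeV^-2, and n_base events for
    case A. The event count grows with luminosity, i.e. divergence^2.
    """
    rng = random.Random(seed)
    sigma = p_beam * div_urad * 1e-6  # pt smearing per axis, GeV/c
    n_events = int(n_base * lumi_for_divergence(div_urad) / BASE_LUMI_FB)
    accepted = []
    for _ in range(n_events):
        pt = math.sqrt(rng.expovariate(slope))  # |t| ~ exp(-slope*|t|), pt = sqrt(|t|)
        if rng.random() > efficiency(pt):
            continue  # event lost to the fixed efficiency
        phi = rng.uniform(0.0, 2.0 * math.pi)
        # Smear x and y independently with the same divergence.
        px = pt * math.cos(phi) + rng.gauss(0.0, sigma)
        py = pt * math.sin(phi) + rng.gauss(0.0, sigma)
        accepted.append(math.hypot(px, py))
    return accepted


if __name__ == "__main__":
    # Point 2: efficiency up by 2.4, luminosity down to 0.66.
    print("R3/R4 relative yield:", relative_yield(2.4, 0.66))
    for case, div in (("A", 100.0), ("B", 200.0), ("C", 316.0)):
        sample = smeared_sample(div, n_base=1000)
        print(case, div, "murad", round(lumi_for_divergence(div), 1), "fb^-1", len(sample), "events")
```

The smeared pt lists for cases A, B and C could then be histogrammed and passed through the same Fourier transform to compare the effect of larger divergence against the gain in statistics.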

Bob

From: Aschenauer Elke-Caroline [mailto:elke AT bnl.gov]

On Mar 9, 2017, at 11:38, Palmer, Robert <palmer AT bnl.gov> wrote:

Dear Bob.

we agree

again we agree

yes, not for the Fourier transform, but for acceptance corrections and so on you get large systematics, as you measure only a small part of the total phase space. Also, one thing we did not study is whether the effect is the same if the functional shape is dipole-like rather than exponential.

yes, this is true.

yes, this is most likely true.

yes, to have a good accuracy at high pt you need more luminosity to compensate the fall off of the cross section.

yes, the spectrometer is not included, because with the bending right now it would not really work. Also we need to really work out what such a spectrometer would be, what technology and so on. This needs some thinking.

Bob, we did not assume a peak luminosity for the studies. We use total integrated lumi, which takes shorter or longer to accumulate depending on what the peak/average lumi of the machine is. This is the 10 fb^-1 vs 1 fb^-1, nothing else.

Bob, I cannot follow this. In a study we would simulate an integrated lumi of 1 fb^-1 and weight this with the acceptance. So higher lumi means either smaller uncertainties or less running time. Also, please keep in mind there is a part of the imaging program (the one needing polarisation) which requires 100 fb^-1 integrated lumi.

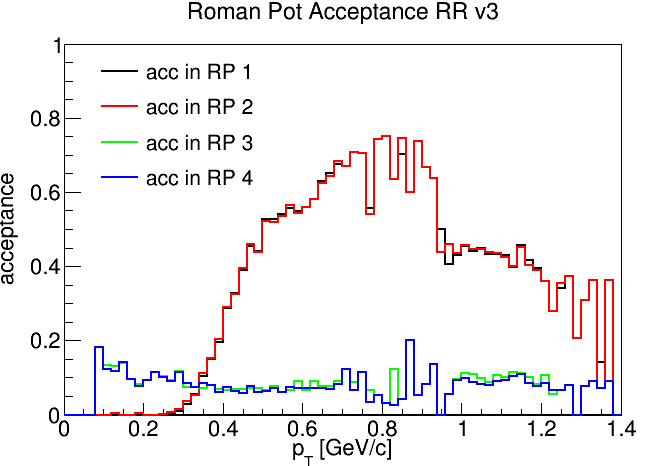

Yes, as usual Rich was super fast and attached is the plot with 1/2 of beam spread.

yes, we have indeed developed a lot of tools which allow us to quickly study impacts.

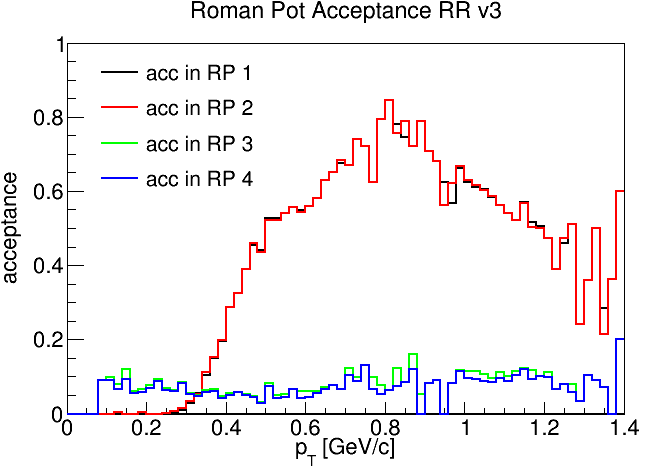

Here is another one from Rich: the increase of the magnet acceptance by 10%. It helps quite a bit at high pt.

This one I need to think more about, and indeed we don't need all answers by the 2nd of April.

Cheers

Rich and Elke

Elke-Caroline Aschenauer

Brookhaven National Lab, Physics Dept., Bldg. 510 /2-195, 20 Pennsylvania Avenue, Upton, NY 11973

Tel.: 001-631-344-4769

Cell: 001-757-256-5224

Mail: elke AT bnl.gov

25 Corona Road, Rocky Point, NY, 11778

Tel.: 001-631-569-4290

Mail: elke.caroline AT me.com
